Most workplace training systems still rely on a model borrowed from exams.

A learner selects an answer.

The system evaluates it.

If the answer is wrong, the learner sees a red indicator or a short message such as “Incorrect. Try again.”

Only after selecting the correct option is the learner allowed to proceed.

From a reporting perspective, this model appears efficient. Completion rates are easy to measure. Pass or fail is simple to record. Training dashboards look clean.

From a learning perspective, it is deeply flawed.

The dead-end problem in traditional e-learning

In traditional e-learning, incorrect responses function as dead ends. The system blocks progress until the learner finds the approved answer.

This design choice prioritizes administrative clarity over cognitive development. What gets measured is not understanding or capability, but compliance with the interface.

The platform tracks:

- clicks

- time spent

- completion status

- final answers

What it does not track is whether the learner understands why a decision matters, what would happen if that decision were made in reality, or how the learner reasons under uncertainty.

A person who guesses until the system turns green is treated the same as a person who carefully evaluates the situation and applies judgment. Both are marked complete.
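To make this concrete, here is a minimal Python sketch of a retry-until-correct question. The names `CompletionRecord` and `run_dead_end_question` are hypothetical and not taken from any particular platform. The sketch assumes only that the system stores completion status and the final answer, as described above.

```python
from dataclasses import dataclass


@dataclass
class CompletionRecord:
    # Hypothetical record: what a completion-focused system might keep.
    learner: str
    completed: bool
    final_answer: str


def run_dead_end_question(learner: str, attempts: list[str], correct: str) -> CompletionRecord:
    """Replay a learner's attempts against a retry-until-correct question."""
    for answer in attempts:
        if answer == correct:
            # Earlier wrong attempts are discarded; only the final selection is stored.
            return CompletionRecord(learner, True, answer)
    # The learner never reached the approved answer.
    return CompletionRecord(learner, False, attempts[-1] if attempts else "")


guesser = run_dead_end_question("guesser", ["A", "B", "C"], correct="C")
reasoner = run_dead_end_question("reasoner", ["C"], correct="C")

# Apart from the learner name, the two stored records are identical.
same_outcome = (guesser.completed, guesser.final_answer) == (
    reasoner.completed,
    reasoner.final_answer,
)
print(same_outcome)  # True
```

Nothing in the stored record separates three guesses from one considered choice, which is the gap the rest of this article is about.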

In real work environments, this distinction is critical.

Why this model breaks down in real situations

Outside of training systems, incorrect decisions do not pause reality.

A poor cybersecurity choice does not prompt a retry screen. It can lead to a breach.

A mishandled customer interaction does not reset the conversation. It escalates.

A safety lapse does not allow a second attempt. It causes an incident.

Traditional e-learning strips decisions of their consequences. Learners never experience the downstream effects of their actions. As a result, they may know which answer is labeled correct without understanding why it matters.

This is why organizations often find that employees pass training yet continue to make costly mistakes.

What consequence-driven learning does differently

Consequence-driven learning starts from a different assumption.

Wrong answers are not failures to be erased. They are decisions that should lead somewhere.

Instead of stopping the exercise, a poor choice opens a new path that reflects a plausible real-world outcome. The learner continues inside the scenario, now dealing with the result of their own action.

This change affects learning in three fundamental ways.

Simulated real-world outcomes replace abstract feedback

Rather than displaying a correction message, consequence-driven systems simulate what happens next.

A cybersecurity mistake may lead to unauthorized access that must now be contained.

A weak compliance decision may trigger regulatory exposure.

A poor service response may cause the customer to escalate or disengage.

The learner is no longer told that a choice was wrong. They are shown what it causes.

Experiential learning research in adult education suggests that people internalize lessons more deeply when they experience outcomes than when they are only given instructions. Consequence-driven learning applies this principle directly.
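One way to picture this is as a small graph in which every choice, good or bad, leads to another node. The Python sketch below is illustrative only: the `Node` class, the `play` function, and the phishing scenario content are assumptions made for this example, not a description of any specific product.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    text: str
    # Maps a choice label to the id of the next node. An empty dict marks an ending.
    choices: dict[str, str] = field(default_factory=dict)


# Hypothetical scenario: a poor first choice opens a new path instead of a retry screen.
SCENARIO = {
    "start": Node(
        "You receive an email asking you to confirm your password.",
        {"click_link": "breach", "report": "reported"},
    ),
    "breach": Node(
        "An unknown login appears on your account.",
        {"ignore": "escalated", "alert_security": "contained"},
    ),
    "reported": Node("Security blocks the sender."),
    "contained": Node("Access is revoked and the account is reset."),
    "escalated": Node("The attacker reaches shared files."),
}


def play(scenario: dict[str, Node], start: str, decisions: list[str]) -> list[str]:
    """Follow a sequence of decisions and return the ids of the nodes visited."""
    path = [start]
    current = start
    for choice in decisions:
        node = scenario[current]
        if not node.choices:
            break  # reached an ending
        current = node.choices[choice]  # raises KeyError for an unknown choice
        path.append(current)
    return path


print(play(SCENARIO, "start", ["click_link", "alert_security"]))
# ['start', 'breach', 'contained']
```

The learner who clicks the link is not sent back to the start. They land in the `breach` node and have to decide what to do next.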

Reasoning becomes observable instead of hidden

Traditional training systems can only see the final selection. They cannot distinguish between guessing and reasoning.

Consequence-driven learning introduces structured reflection. Learners are asked to explain why they chose a particular action.

This surfaces decision-making patterns that are otherwise invisible:

- taking shortcuts to reduce effort

- prioritizing speed over accuracy

- following habit rather than policy

- making risk trade-offs without recognizing them

For instructors and organizations, this changes training from answer tracking to judgment analysis. It becomes possible to see not just what people chose, but how they think.
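As a rough illustration, the sketch below stores each decision together with the learner's stated rationale and a pattern label, then counts which patterns sit behind a given choice. Everything here is hypothetical: the `DecisionRecord` fields, the pattern names, and the sample entries are invented for the example, and the sketch assumes a reviewer (human or automated) assigns the label.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class DecisionRecord:
    learner: str
    choice: str
    rationale: str  # the learner's own explanation
    pattern: str    # label assigned on review; the taxonomy is an assumption


# Invented sample data, for illustration only.
records = [
    DecisionRecord("ana", "click_link", "It looked urgent, so I acted fast.", "speed_over_accuracy"),
    DecisionRecord("ben", "click_link", "I always open emails from IT.", "habit_over_policy"),
    DecisionRecord("cy", "report", "The sender domain did not match.", "policy_followed"),
    DecisionRecord("dee", "click_link", "I had no time to check.", "speed_over_accuracy"),
]


def pattern_counts(records: list[DecisionRecord], choice: str) -> Counter:
    """Count the reasoning patterns recorded behind one particular choice."""
    return Counter(r.pattern for r in records if r.choice == choice)


print(pattern_counts(records, "click_link").most_common())
# [('speed_over_accuracy', 2), ('habit_over_policy', 1)]
```

Three learners made the same poor choice, but for two different reasons, and those reasons call for different follow-up.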

Adaptive navigation targets actual skill gaps

Most e-learning delivers identical content to everyone, regardless of where they struggle.

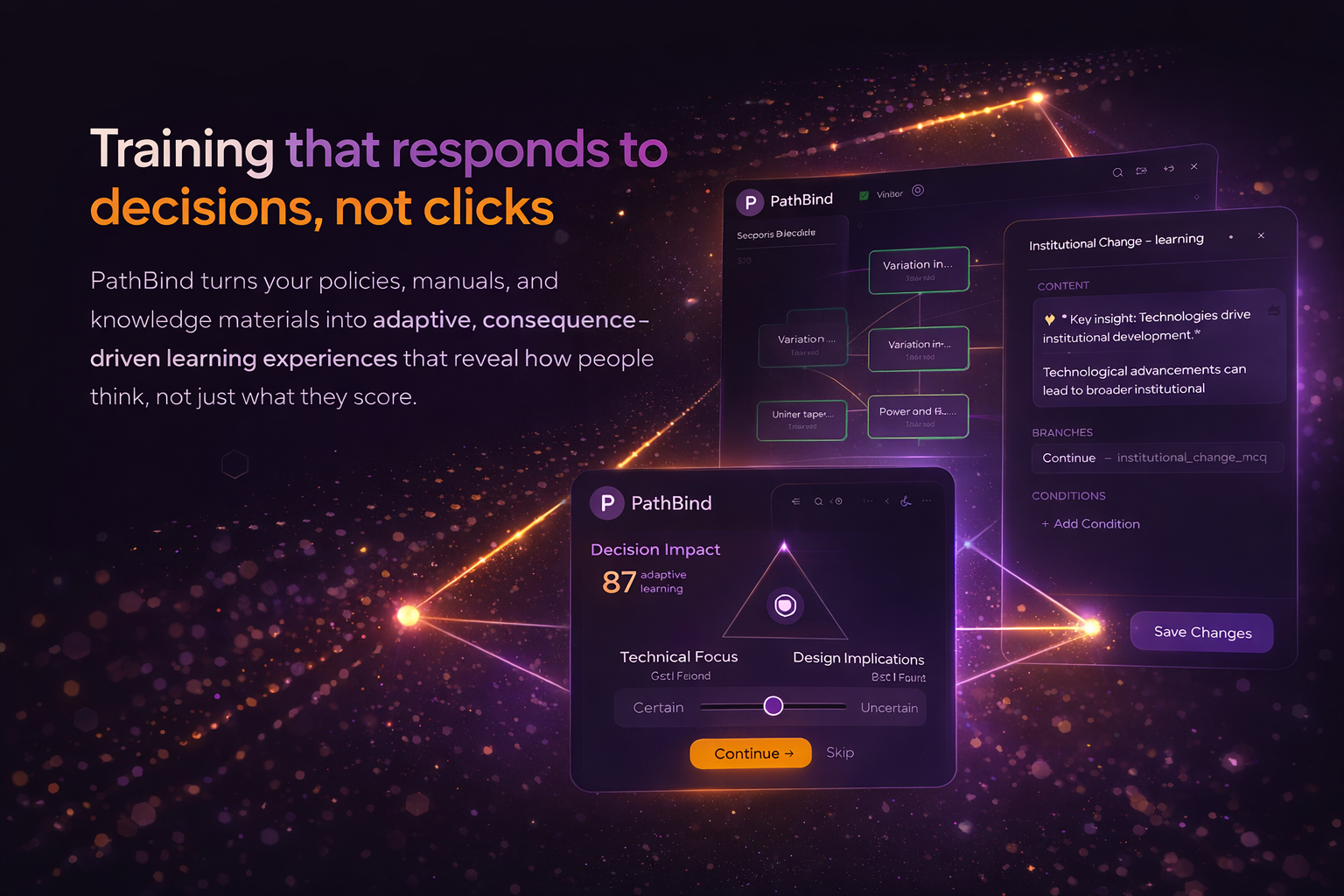

Consequence-driven systems can use Bayesian skill tracking to update a learner’s proficiency estimates after each decision. The system builds a probabilistic model of what the learner understands and where uncertainty remains.
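The article does not say which Bayesian model is used, so the sketch below assumes one of the simplest options: a Beta-Bernoulli estimate per skill, where each decision counts as either good or poor. The `SkillEstimate` class and its field names are hypothetical. A production system might instead use something richer, such as Bayesian Knowledge Tracing.

```python
from dataclasses import dataclass


@dataclass
class SkillEstimate:
    # Beta(1, 1) is a uniform prior: no assumption about the learner yet.
    alpha: float = 1.0  # pseudo-count of good decisions
    beta: float = 1.0   # pseudo-count of poor decisions

    def update(self, good_decision: bool) -> None:
        """Bayesian update of the Beta distribution after one observed decision."""
        if good_decision:
            self.alpha += 1
        else:
            self.beta += 1

    @property
    def mean(self) -> float:
        """Estimated probability that the next decision on this skill is good."""
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self) -> float:
        """Remaining uncertainty; it shrinks as more decisions are observed."""
        n = self.alpha + self.beta
        return (self.alpha * self.beta) / (n * n * (n + 1))


risk_recognition = SkillEstimate()
for outcome in [False, False, True]:  # two poor decisions, then a good one
    risk_recognition.update(outcome)

print(round(risk_recognition.mean, 2))      # 0.4
print(round(risk_recognition.variance, 2))  # 0.04
```

The mean is the proficiency estimate and the variance is the "where uncertainty remains" part: after only three decisions, the estimate is still loose.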

Scenarios then adapt automatically. If a learner consistently struggles with risk recognition, the system presents situations that focus on that weakness. If judgment improves, complexity increases.

This approach avoids both under-challenging and overwhelming learners. Training time is spent where it matters most.
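A simple selection rule along these lines might look like the following Python sketch. It assumes each skill has a proficiency estimate between 0 and 1 and each scenario carries a skill tag and a difficulty on the same scale. The function name, the dictionary keys, and the sample scenarios are all hypothetical.

```python
def next_scenario(proficiency: dict[str, float], scenarios: list[dict]) -> dict:
    """Pick a scenario for the learner's weakest skill, matched to their current level."""
    # Target the skill with the lowest estimated proficiency.
    weakest = min(proficiency, key=proficiency.get)
    level = proficiency[weakest]
    # Fall back to all scenarios if none is tagged with the weakest skill.
    candidates = [s for s in scenarios if s["skill"] == weakest] or scenarios
    # Choose the difficulty closest to the estimate: not too easy, not overwhelming.
    return min(candidates, key=lambda s: abs(s["difficulty"] - level))


# Invented sample catalogue, for illustration only.
scenarios = [
    {"id": "phish-basic", "skill": "risk_recognition", "difficulty": 0.3},
    {"id": "phish-advanced", "skill": "risk_recognition", "difficulty": 0.7},
    {"id": "handoff", "skill": "escalation", "difficulty": 0.8},
]

struggling = {"risk_recognition": 0.35, "escalation": 0.8}
improving = {"risk_recognition": 0.6, "escalation": 0.8}

print(next_scenario(struggling, scenarios)["id"])  # phish-basic
print(next_scenario(improving, scenarios)["id"])   # phish-advanced
```

The same learner is routed to a harder scenario on the same skill once their estimate rises, which is the "if judgment improves, complexity increases" behavior described above.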

Brick walls versus branching roads

The structural difference between traditional e-learning and consequence-driven learning is simple but profound.

Traditional e-learning is a brick wall. Progress stops until the correct door is found.

Consequence-driven learning is a branching road. Learners are allowed to take the wrong turn, see where it leads, and learn how to navigate back.

Judgment is built by navigating complexity, not by avoiding it.

Why this matters for training effectiveness

Organizations are increasingly required to demonstrate that training builds real capability, not just completion.

Completion metrics do not show preparedness. Correct answers do not prove judgment. Auditors, regulators, and leaders are asking harder questions about whether training actually reduces risk.

Training systems that treat mistakes as dead ends cannot answer those questions.

Consequence-driven learning can. It captures decisions, reasoning, and adaptive progression in a way that aligns training with real-world behavior.

That alignment is what makes learning stick.